3.9 PCA

For some sets of models (such as linear regression) highly correlated variables may cause model instability or, perhaps more importantly, lead to wider confidence intervals around model predictions (i.e., how confident we are about our prediction). In these cases, it may help to collapse these variables, while still accounting for the majority of the variance. This is the basic idea behind Principal Components Analysis (PCA). You use PCA to collapse sets of variables into principal components, reducing the dimensionality of your data while maintainingg \(X\) percent of the original variation in the data. Reducing the dimensionality generally has an associated cost of higher model bias. However, it will also nearly always reduce model variability. The proportion of the original variability to maintain can thus be considered a tuning parameter, balancing bias with variance.

Probably my favorite discussion of PCA comes from a discussion on CrossValidated on how to make sense of PCA. The opening poster asked how you would explain PCA to a layman and why it’s needed. The entire thread is worth reading through, and there’s a particularly nice example from JD Long comparing the first principal component to the line of best fit in linear regression.

For our purposes, we’re primarily interested in what proportion of the total variability in the independent variables we should maintain. That is, can we improve our model performance by collapsing groups of (correlated) columns into a smaller set of principal components?

3.9.1 PCA with {recipes}

Let’s move back to our full dataset. Our final recipe looked like this.

rec <- recipe(score ~ ., train) %>%

update_role(contains("id"), ncessch, new_role = "id vars") %>%

step_mutate(lang_cd = factor(ifelse(is.na(lang_cd), "E", lang_cd)),

tst_dt = lubridate::mdy_hms(tst_dt)) %>%

step_zv(all_predictors()) %>%

step_dummy(all_nominal())To conduct PCA we need to make sure that

- All variables are numeric

- All variables are on the same scale

- No missing data

Our recipe above ensures all variables are numeric, but it doesn’t handle missing data, and there is no standardization of variables. Let’s redo this recipe to make sure it’s ready for PCA.

rec <- recipe(score ~ ., train) %>%

step_mutate(tst_dt = lubridate::mdy_hms(tst_dt)) %>%

update_role(contains("id"), ncessch, new_role = "id vars") %>%

step_zv(all_predictors()) %>%

step_unknown(all_nominal()) %>%

step_medianimpute(all_numeric(), -all_outcomes(), -has_role("id vars")) %>%

step_normalize(all_numeric(), -all_outcomes(), -has_role("id vars")) %>%

step_dummy(all_nominal(), -has_role("id vars"))The above is not a whole lot more complex than our original recipe. It just assigns an "unknown" level to the nominal variables and imputes the remaining numeric variables with the median of that variable. It then normalizes all variables (center/scales) and dummy codes the nominal variables.

This recipe gives us a data frame with many columns. Specifically

## [1] 79Let’s create a recipe that retains 80% of the total variability with a smaller set of principal components.

Notice that we just have to specify the threshold of variance we want to maintain. How many columns do we have now?

## [1] 10We’ve dramatically reduced the dimensionality in the data, while still retaining 80% of the total variability.

An alternative method is to specify the number of components we want. For example, let’s extract only the first five components.

I generally prefer the former because, if we’re thinking about PCA through a predictive modeling framework, we’re probably less concerned with the number of components and more concerned with the variation they represent.

So how do we know how much of the variabilty we should retain? This is a difficult question, but sometimes plots of the principal components can help. Let’s look at the five components we just extracted. If we prep the recipe, you’ll see there’s some extra information we can access.

## [1] "var_info" "term_info" "steps" "template" "retained" "tr_info"

## [7] "orig_lvls" "last_term_info"If we access the steps we can get additional information. The PCA step is the seventh, and it has the following elements stored in the list.

## [1] "terms" "role" "trained" "num_comp" "threshold" "options" "res" "prefix" "skip"

## [10] "id"Let’s look into res

## [1] "sdev" "rotation" "center" "scale"And now we get what we’ve been (perhaps unknowingly to you, dear reader) looking for, the sdev object, which lists the standard deviation of each principal component. We can look at how much variance each component accounts for as follows

## [1] 7.498550e-01 5.139686e-02 4.960709e-02 4.268070e-02 2.302411e-02 1.165046e-02 8.506419e-03 7.885661e-03

## [9] 7.404347e-03 7.191037e-03 5.686267e-03 4.941382e-03 4.148856e-03 3.963122e-03 3.364723e-03 2.556973e-03

## [17] 2.300300e-03 1.796940e-03 1.685488e-03 1.558769e-03 1.456765e-03 1.266593e-03 1.097991e-03 9.865998e-04

## [25] 8.195242e-04 7.406596e-04 6.539508e-04 5.075284e-04 3.892019e-04 2.779546e-04 2.049425e-04 1.721559e-04

## [33] 6.746958e-05 5.230658e-05 3.378346e-05 2.927400e-05 1.813925e-05 1.501099e-05 5.159161e-06 4.933063e-07

## [41] 1.173255e-31 2.971671e-32 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33

## [49] 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33

## [57] 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33

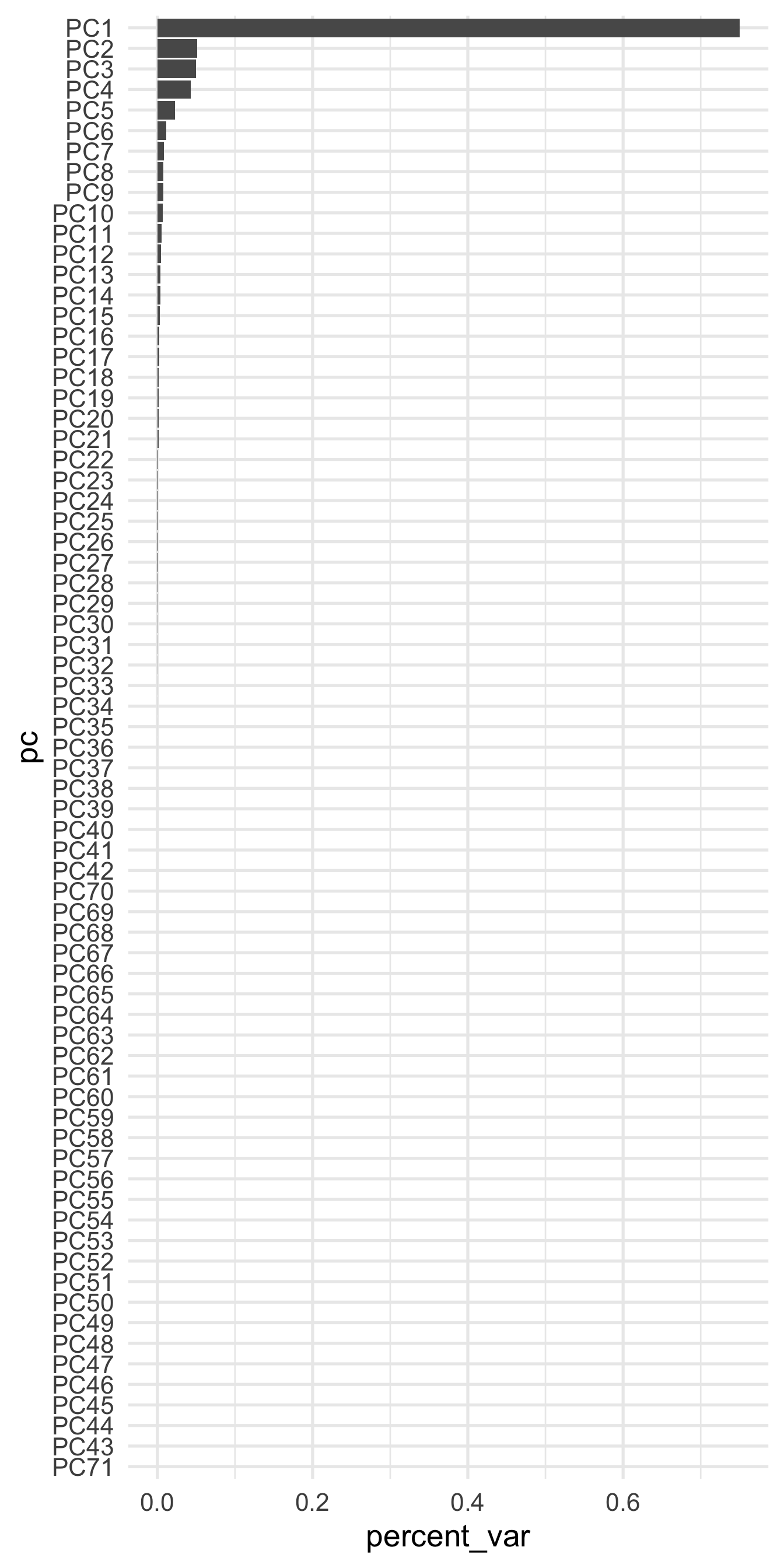

## [65] 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33 5.980014e-33 2.934497e-33That’s difficult to read. Let’s plot it instead

pcs <- data.frame(pc = paste0("PC", 1:length(pvar)),

percent_var = pvar) %>%

mutate(pc = reorder(factor(pc), percent_var))

ggplot(pcs, aes(pc, percent_var)) +

geom_col() +

coord_flip()

A few things are of note here. First, it appears the majority of the variance is accounted for by our first few components, which is not altogether surprising given that we already new the first 10 components accounted for approximately 85% of the total variability. However, you might also wonder why there are so many freaking components shown?! I thought we only asked for five!? That is true. But the model actually estimates all the components, and then just pulls out whatever we ask, based on either the threshold or the num_comp arguments. For example, let’s bake the five component recipe on the full training data.

## # A tibble: 2,841 x 13

## id attnd_dist_inst… attnd_schl_inst… tst_dt partic_dist_ins… partic_schl_ins… ncessch score

## <dbl> <dbl> <dbl> <dttm> <dbl> <dbl> <dbl> <dbl>

## 1 154420 2057 481 2018-05-29 00:00:00 2057 481 4.11e11 2669

## 2 248739 1978 264 2018-05-14 00:00:00 1978 264 4.11e11 2500

## 3 126093 2243 1160 2018-05-30 00:00:00 NA NA 4.10e11 2377

## 4 225721 2180 834 2018-05-30 00:00:00 2180 834 4.11e11 2353

## 5 110026 2183 942 2018-06-05 00:00:00 2183 942 4.11e11 2504

## 6 149751 2084 563 2018-05-18 00:00:00 2084 563 4.10e11 2508

## 7 116315 2206 3426 2018-05-18 00:00:00 2206 3426 4.11e11 2448

## 8 145793 2180 883 2018-05-09 00:00:00 2180 883 4.11e11 2650

## 9 73617 1976 5292 2018-05-24 00:00:00 1976 5292 4.10e11 2656

## 10 226521 2043 394 2018-04-26 00:00:00 2043 394 4.10e11 2489

## # … with 2,831 more rows, and 5 more variables: PC1 <dbl>, PC2 <dbl>, PC3 <dbl>, PC4 <dbl>, PC5 <dbl>And as expected, we get only the five components we requested.

PCA reduces the dimensionality in the data, which can help guard against overfitting. However, it also may introduce a small amount of bias. Plots like the above can help determine how many components we should retain because we can see that, for example, after about 20 components or so we’re getting almost nothing in return. If you are using PCA and your model still seems to be overfitting, try reducing the dimensionality more (extracting fewer components). On the other hand, if you do not appear to be in danger of overfitting, you might try extracting more components to help reduce bias.